RGBlack: AI causes history to repeat itself

WPP’s Racial Equity Programme has supported AKQA’s RGBlack initiative in a new phase of development. Tim Devine, of WPP’s AKQA, explains

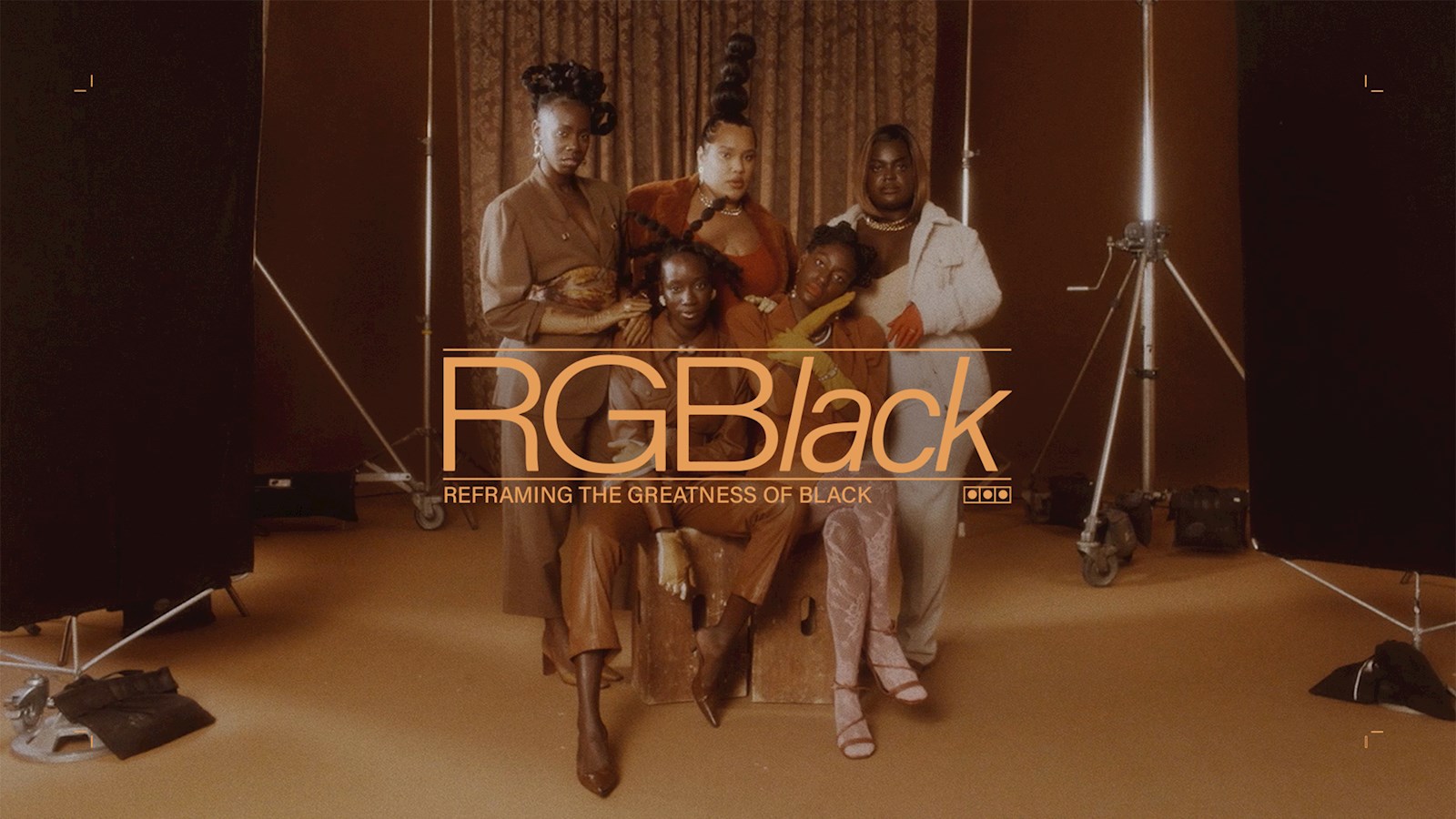

Following the success of the RGBlack initiative – a platform that addresses the unsatisfactory way in which film technology captures all skin tones – AKQA is using funding from WPP’s Racial Equity Programme to develop a new standard of image capture for digital creators.

AKQA is striving to mitigate the impacts of coded bias in AI-powered tools by raising awareness of the dangers those tools pose for the representation of dark skin tones.

It all began with the Shirley Card

Undoubtedly, generative AI is presenting a fresh challenge, but let’s not forget how AKQA’s RGBlack platform came about. It all started with the ‘Shirley Card’.

The Shirley Card was a photo of a white woman accompanied by colour references – as well as light exposure and density information – that photographers and designers used to balance photographic outputs to meet the needs of the dominant target market at the time. Updated Shirley Cards – which attempted to address this bias – were never widely adopted in the 1990s because they coincided with the emergence of digital photography. Hence, the principles entrenched in the original Shirley Cards continued to dominate.

Clearly, the Shirley Card has left anyone with darker skin tones misrepresented in photography. This is the wrong that AKQA has sought to correct – and has succeeded in correcting – through the development of its RGBlack platform.

“We wanted to include people with lived experience in our platform development process as they know how they want their skin tone to be represented,” says Devine. “Let's not make any assumptions that hair, makeup and lighting are all the same for all skin types. That is why we put together a platform to give creators and content producers the tools and information to be able to upgrade and understand how these things might be better represented, and to dissolve the legacy of the misrepresentation of dark skin tones.”

But it is not that straightforward. While building this platform, it became apparent that we are in a new era of bias.

“In the process of building this platform we realised that we're entering a phase of history repeating itself,” says Devine. “Generative AI is using existing photographs to generate models for AI, and those models are biased in relation to darker skin tones and people on the margins – or in relation to anyone else who’s not predominant in the data sets. These sorts of impacts accrue when training AI tools, and that creates structural inequality in the tools themselves. That becomes a real problem.”

Sounds like a problem for RGBlack

The problem is ripe for the minds that built the RGBlack platform originally. And the problem associated with bias entrenched in generative AI is a significant one. Devine points to the feedback loop inherent in the development of generative AI. This, in turn, reinforces bias in society that we simply cannot ignore. The bias becomes stuck in a loop, and creators must draw on their own judgement and scepticism to bring balance.

“There's a term in AI that is used for the moment, whether that's now or in the future, when values are locked into the data. It’s called ‘values lock’. Yet, I think everyone would agree that, over the last 10 years, our values have changed. There's been more progress around equity, equality and representation. And we want to see that continue. The risk is that these models, and these tools, will start to hinder that process of transition towards a more equitable society,” says Devine.

Of course, generative AI has come on in leaps and bounds over the last couple of years – at least in terms of public awareness and ease of use. It has become accessible and captures the imagination, but it is trained on what has gone before. And what happened before, when it comes to the representation of black skin tones, was not equitable.

“These generative AI tools are powerful,” says Devine, “and there is real risk in further marginalising some of us who are already poorly represented, and then reinforcing structural inequality through poor data and modelling.”

He says it’s going to be hard to weed out the bias. “Doing that will bring its own bias. Who gets to say what is ideal? Who has that power? That's the conundrum. That’s what we should be thinking about now,” he says.

Devine reminds us that we need to be aware of the pitfalls – whether we’re knowingly using generative AI or not. “At what point do we start to make people explicitly aware that these tools are biased and will cause harm to marginalised communities?” he asks.

“So, our goal is to complicate the narrative around generative AI to make sure that people ask the question – when they go to use these tools – might this reinforce structural inequality?” says Devine. “Shirley Cards are a parallel concern to what is happening now with generative AI, but AI has far greater scale and we should be even more concerned about that.”

Published on 14 April 2023
